Extended reality (XR) technologies, which include virtual, augmented and mixed reality, have progressed rapidly in recent years, opening up a wide range of new use cases. In this state-of-the-art review, we first define virtual and augmented reality, then survey the application domains in the automotive industry and driving simulation along with their technologies, and finally discuss the obstacles that still need to be overcome.

1. Definitions

Virtual reality can be defined as a technology that uses computers to simulate a three-dimensional environment, characterized by the experience of being present in a virtual environment while feeling as if one were in a real one. It should be distinguished from augmented reality, which is the experience of the real world enriched with virtual entities (Berthoz et al., 2009).

Virtual environments are created through computer programs. The creation of a realistic environment in virtual reality relies on three fundamental pillars: interaction, presence, and immersion. These pillars are interconnected, interdependent, and crucial for delivering an optimal virtual reality experience. (Ismail et al., 2019)

Interactions, which involve both the virtual environment's response to user actions and the user's response to the environment, play a crucial role in achieving good immersion. They can take the form of sensory stimuli or physical movements consistent with the simulated space. The computer system captures these inputs, analyzes them, and updates the environment accordingly, creating a dynamic feedback loop between the user and the virtual environment.
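The feedback loop described above can be sketched as a simple perception, action and update cycle. This is a minimal, hypothetical illustration: `UserInput`, `VirtualWorld`, `simulated_user` and the one-dimensional "object position" are invented for the example and do not come from any particular engine; real engines run a similar loop internally and render a full frame at each step.

```python
from dataclasses import dataclass


@dataclass
class UserInput:
    """One input sample from the user (hypothetical): a push along one axis, in m/s."""
    push: float


@dataclass
class VirtualWorld:
    """A toy virtual environment containing a single movable object."""
    object_position: float = 0.0

    def render(self) -> float:
        # Environment -> user: what the user perceives (here, just the position).
        return self.object_position

    def update(self, user_input: UserInput, dt: float) -> None:
        # User -> environment: the system analyzes the input and updates the scene.
        self.object_position += user_input.push * dt


def simulated_user(perceived: float, target: float, gain: float = 2.0) -> UserInput:
    """Hypothetical user who pushes the object towards a target based on what they see."""
    return UserInput(push=gain * (target - perceived))


def run_loop(world: VirtualWorld, target: float, steps: int, dt: float = 1 / 90) -> float:
    """Run the perception -> action -> update cycle, e.g. at a 90 Hz refresh rate."""
    for _ in range(steps):
        perceived = world.render()
        action = simulated_user(perceived, target)
        world.update(action, dt)
    return world.render()


if __name__ == "__main__":
    world = VirtualWorld()
    final = run_loop(world, target=1.0, steps=900)  # 10 s of interaction at 90 Hz
    print(f"object at {final:.3f} m")
```

Because each user action depends on what the environment last displayed, the loop is closed: the object converges towards the target only through the repeated exchange between user and system.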

Immersion is a psychological state in which subjects stop being aware of their own physical state. In our use case, the user no longer takes the real world into account, in favor of the virtual world.

Lastly, the feeling of presence defines the subjective feeling of being physically present in the virtual world. In VR, it translates into the feeling of being present in this virtual environment, of being able to interact with it and of being aware of the scope of one’s actions.

2. Application domains

Mixed reality offers new opportunities to many sectors of the automotive industry, such as training, design validation and sales. In this first part, we review the major activities that use this technology.

2.1. Training

Learning and mixed reality are combined in numerous applications, offering significant benefits to companies. A major advantage is the ability to train employees without constraints related to personnel or equipment availability, scheduling conflicts, or health and safety risks. Some studies even suggest that virtual reality-based learning can outperform traditional methods.

With just a VR headset, new employees can learn procedures without the need for an instructor or disrupting a product line, and in a wide range of scenarios. Using virtual reality also facilitates better recall than paper-based learning and can be combined with simulators to enhance the learning experience through muscle memory (Mignot et al., 2019). Furthermore, virtual reality can draw attention to important details that may not be as visible in real-world settings (d’Huart, 2001).

2.2. Visualization

Virtual reality and augmented reality make it possible to show how a finished product operates before it is produced, to visualize installations or phenomena normally hidden from the naked eye, or to view a design in three dimensions without the cost of building a physical model. A finished construction project can be visualized at real scale in virtual reality, allowing possible design, layout, or ergonomic flaws to be identified, which helps avoid additional costs.

Moreover, VR and AR are also effective marketing tools. For example, Dacia recently launched an AR application, “Dacia AR,” that enables potential customers to view a customized car model from the catalog directly in their environment, providing them with a more engaging and immersive experience. Similarly, Audi has recently introduced Holoride, a VR application designed for passengers using a Vive Flow, which reduces car sickness and offers content adapted to a moving vehicle, enhancing the user’s experience (Vitard, 2019).

In addition to these applications, augmented reality can display real-time information to users hands-free. Because the display is worn by the user, there is no need to carry numerous papers or devices. In driving simulation, AR also lets users see data directly during the simulation without exiting the simulator, which makes the experience more efficient.

2.3. Design

Design is another major beneficiary. Virtual reality offers new ways of engineering, enabling designers and engineers to create and manipulate 3D models of their products in a virtual environment and to explore and test various design iterations before moving to the physical prototyping stage. This can save significant time and resources by identifying and resolving design issues early in the process (Traverso, 2015). AR can be used to overlay virtual designs onto the physical environment, giving designers a better sense of scale and proportion and showing how their designs would look in real-world contexts. Overall, XR technologies can improve collaboration, reduce costs, and speed up design engineering by providing designers and engineers with powerful tools for visualizing and refining their designs. Let’s now look at some use cases:

Dassault Systèmes has released Catia 3DEXPERIENCE, a module integrated into its digital design software Catia, to display a 3D model in VR at any stage of the creation process to assess the dimensions and feasibility of the part.

Figure 1: Dassault Systèmes VR solution

Recently, new software has appeared that lets designers “create” objects from scratch with 3D modeling or sculpting tools directly in virtual reality. These interactive editing tools let designers evaluate alternative solutions in real time and at “virtual full scale”, without going back and forth between the computer and the VR room.

2.4. Remote assistance and collaborative work

Virtual reality, especially through CAVEs (Cave Automatic Virtual Environment), allows multiple people to view and interact with a design collaboratively. Furthermore, as remote work becomes increasingly prevalent in today’s companies, virtual reality could bring people together for collaborative experiences regardless of their location and time.

Augmented reality headsets are equipped with sensors, including cameras, that enable remote assistance directly through the device, helping users in real time from their own point of view. This allows for better communication and support at all times (Lee et al., 2021).

3. Technologies

In this section, we will see the different technologies that currently exist for virtual and augmented reality.

3.1. Head-Mounted Displays (HMD)

The first functional virtual reality headset was created by Ivan Sutherland in 1968. Since then, this technology has evolved and virtual reality headsets really became mainstream about a decade ago. Their relatively low price and the small amount of space required made them accessible to the general public.

They consist of a pair of glasses, either standalone or connected to a computer, equipped with sensors and two Fresnel lenses through which each eye views its own image, which produces stereoscopy. Below is an overview of headsets from the main virtual reality manufacturers.

Two categories can be distinguished:

– Video pass-through headsets, which do not let users see through the device directly.

Here are some examples of headset models:

Video pass-through headsets

| Headset | Standalone | Diagonal field of view | Screen resolution | Refresh rate | Hand tracking | Eye tracking |
|---|---|---|---|---|---|---|
| Price below 500 € | | | | | | |
| Acer OJO 500 | X | 133° | 1440×1440 | 90 Hz | X | X |
| HTC Vive Flow | ✔ | 100° | 1600×1600 | 75 Hz | X | X |
| Huawei VR Glass 6DoF | X | 90° | 1600×1600 | 90 Hz | X | X |
| iQIYI Qiyu Dream | ✔ | 97° | 1280×1440 | 72 Hz | X | X |
| Meta Quest 2 | ✔ | 134° | 1832×1920 | 120 Hz | ✔ | X |
| Nolo X1 | ✔ | 132° | 1280×1440 | 90 Hz | X | X |
| Oculus Go | X (Bluetooth connection to a smartphone) | 127° | 1280×1440 | 60 Hz | X | X |
| Samsung Odyssey+ | X | 146° | 1440×1600 | 90 Hz | X | X |
| Price between 500 and 1500 € | | | | | | |
| HTC Vive XR Elite | ✔ | 109° | 1920×1920 | 90 Hz | ✔ | X |
| HTC Vive Cosmos Elite | X | 97° | 1440×1700 | 90 Hz | X | ✔ |
| HTC Vive Pro 2 | X | 113° | 2160×2160 | 120 Hz | X | ✔ |
| HP Reverb G2 | X | 107° | 2448×2448 | 90 Hz | X | X |
| Lynx R1 | ✔ | 127° | 1600×1600 | 90 Hz | ✔ | X |
| Meta Quest 3 | ✔ | 134° | 2064×2208 | 120 Hz | ✔ | X |
| Meta Quest Pro | ✔ | 111° | 1800×1920 | 90 Hz | ✔ | ✔ |
| Pico 4 Pro | ✔ | 146° | 2160×2160 | 90 Hz | ✔ | ✔ |
| Pico Neo 3 Pro | X | 133° | 1832×1920 | 90 Hz | X | X |
| PlayStation VR2 | X (wired connection to a PlayStation 5) | 110° | 2000×2040 | 120 Hz | X | ✔ |
| TCL NXTWEAR V | ✔ | 108° | 2280×2280 | 90 Hz | – | – |
| Valve Index | X | 114° | 1440×1600 | 144 Hz | X | X |
| Price higher than 1500 € | | | | | | |
| Apple Vision Pro | ✔ | – | 4096×4096 | – | ✔ | ✔ |
| HTC Vive Focus 3 | X | 113° | 2448×2448 | 90 Hz | X | ✔ |
| Pimax Reality 12K QLED | ✔ | 240° | 6K | 200 Hz | X | ✔ |
| Somnium Space VR1 | ✔ | 160° | 2880×2880 | 90 Hz | ✔ | ✔ |
| Varjo Aero | X | 121° | 2880×2720 | 90 Hz | X | ✔ |
| Varjo VR-3 | X | 146° | 2880×2720 | 90 Hz | ✔ | ✔ |

– “Optical see-through” headsets, based on transparent or semi-transparent screens, which allow users to see the real world while also displaying computer-generated graphics or information. This type of technology can be used for augmented reality and mixed reality.

Optical see-through headsets

| Headset | Standalone | Diagonal field of view | Screen resolution | Motion tracking | Eye tracking | Battery life |
|---|---|---|---|---|---|---|
| Price below 500 € | | | | | | |
| Huawei Vision Glass | X (Bluetooth connection to a smartphone) | 41° | 1920×1080 | X | X | – |
| Nreal Air | X (wired connection to a smartphone) | 46° | 1920×1080 | X | X | / |
| Rokid Max | X (wired connection to a smartphone) | 50° | 1920×1080 | X | X | / |
| Viture One | X (wired neckband connection) | 43° | 1920×1080 | X | X | – |
| Xiaomi Smart Glasses | ✔ | 29° | 640×480 | X | X | 7 h |
| Price between 500 and 1500 € | | | | | | |
| Shadow Creator Action One | ✔ | 45° | 1280×720 | ✔ | X | 3.5 h |
| Digilens Argo | ✔ | 30° | 1280×720 | ✔ | X | 6 h |
| Lenovo ThinkReality A3 | X (wired connection to a computer) | 47° | 1920×720 | X | X | / |
| Oppo Air Glass 2 | ✔ | 28° | – | X | X | 10 h |
| Vuzix Blade 2 | ✔ | 20° | 540×540 | X | X | 5.5 h |
| Vuzix Ultralite | ✔ | – | – | X | X | – |
| Price higher than 1500 € | | | | | | |
| Magic Leap 2 | ✔ | 70° | 1440×1760 | ✔ | ✔ | 3.5 h |
| Microsoft HoloLens 2 | ✔ | 52° | 2048×2048 | ✔ | X | 2.5 h |
| Epson Moverio BT-45CS | ✔ | 34° | 1920×1080 | X | X | 8 h |
| RealWear Navigator 500 | ✔ | 20° (horizontal) | 854×480 | X | X | 8 h |
| ThirdEye X2 MR Glasses | ✔ | 42° | 1280×720 | ✔ | X | 2 h |
| Vuzix M4000 | ✔ | 28° | 854×480 | X | X | 2 h |

3.2. Caves

The CAVE (Cave Automatic Virtual Environment) is a technology created by Carolina Cruz-Neira, Daniel J. Sandin, and Thomas A. DeFanti in 1992. Consisting of 3 to 6 walls onto which screens or projectors display a virtual environment, these devices are more expensive and bulky than HMD devices, but they offer greater resolution and processing power while enabling collaborative work.

Reflective spheres placed on the users (often on their glasses) allow infrared sensors to determine their positions so that the displayed image can be adjusted to their point of view. Because the images are projected onto the surrounding walls, users can still see their own bodies and are therefore not completely immersed.
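Adjusting the image to the tracked position usually means recomputing, for each wall and each frame, an off-axis (asymmetric) perspective frustum from the eye position and the corners of the wall. The sketch below follows the widely used generalized perspective projection for projection walls (as described by R. Kooima); the 3 m wall, the coordinate frame and the near-plane distance are illustrative assumptions, and a real system would pass these bounds to its graphics API (for example an OpenGL-style frustum call) once per eye.

```python
import math

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec3) -> Vec3:
    length = math.sqrt(dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)


def wall_frustum(pa: Vec3, pb: Vec3, pc: Vec3, eye: Vec3, near: float):
    """Near-plane bounds (left, right, bottom, top) of the frustum for one CAVE wall.

    pa, pb, pc: lower-left, lower-right and upper-left corners of the wall.
    eye: eye position estimated from the tracked markers.
    """
    vr = normalize(sub(pb, pa))    # wall's horizontal axis
    vu = normalize(sub(pc, pa))    # wall's vertical axis
    vn = normalize(cross(vr, vu))  # wall normal, pointing towards the user
    va, vb, vc = sub(pa, eye), sub(pb, eye), sub(pc, eye)
    dist = -dot(va, vn)            # eye-to-wall distance
    scale = near / dist
    return (dot(vr, va) * scale, dot(vr, vb) * scale,
            dot(vu, va) * scale, dot(vu, vc) * scale)


# Hypothetical 3 m x 3 m front wall, 1.5 m in front of the room centre (metres).
FRONT_WALL = ((-1.5, 0.0, -1.5), (1.5, 0.0, -1.5), (-1.5, 3.0, -1.5))

if __name__ == "__main__":
    print(wall_frustum(*FRONT_WALL, eye=(0.0, 1.5, 0.0), near=0.1))  # symmetric
    print(wall_frustum(*FRONT_WALL, eye=(0.5, 1.5, 0.0), near=0.1))  # user stepped right
```

When the user stands at the centre, the frustum is symmetric; when they step to the right, the left bound grows and the right bound shrinks, which is what keeps the projected scene geometrically correct from the new viewpoint.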

Many CAVEs are installed around the world; here are some of them:

France

Blue Lemon, Arts et Métiers Institute of Technology

Chalon-sur-Saône, France

The Blue Lemon, provided by the company Antycip in collaboration with Renault (LiV joint laboratory), is a virtual immersion room with 5 screens of 3.40 meters belonging to the Arts et Métiers Institute of Chalon-sur-Saône.

“The size of each projected pixel is about 1.2 mm (very close to human visual resolution under normal conditions), the maximum luminance is about 25,000 lumens and the contrast is about 2000:1 in active stereoscopy, ensuring excellent viewing conditions. A cluster of computers allows images to be calculated in real-time. A spatialized sound system adds a sound dimension to interactive visualization.”
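The comparison between a 1.2 mm pixel and human visual resolution depends on viewing distance, which a little trigonometry makes explicit. The sketch below assumes the common reference acuity of about one arcminute (roughly 20/20 vision) and uses hypothetical viewing distances: a 1.2 mm pixel subtends one arcminute at roughly 4.1 m, and about 2.4 arcminutes for a user standing near the center of a 3.40 m room (about 1.7 m from the walls).

```python
import math

ARCMIN_PER_RAD = 180 * 60 / math.pi
ACUITY_ARCMIN = 1.0  # assumed reference acuity (roughly 20/20 vision)


def pixel_angle_arcmin(pixel_mm: float, distance_mm: float) -> float:
    """Angle subtended by one pixel seen head-on from a given distance."""
    return 2 * math.atan(pixel_mm / (2 * distance_mm)) * ARCMIN_PER_RAD


def distance_for_acuity_mm(pixel_mm: float, acuity_arcmin: float = ACUITY_ARCMIN) -> float:
    """Viewing distance at which one pixel subtends exactly the acuity angle."""
    half_angle_rad = acuity_arcmin / ARCMIN_PER_RAD / 2
    return pixel_mm / (2 * math.tan(half_angle_rad))


if __name__ == "__main__":
    PIXEL_MM = 1.2      # pixel size quoted for the Blue Lemon
    SCREEN_MM = 3400    # width of one 3.40 m screen
    for distance_mm in (1000, 1700, 3400):  # hypothetical viewing distances
        angle = pixel_angle_arcmin(PIXEL_MM, distance_mm)
        print(f"{distance_mm / 1000:.1f} m: {angle:.2f} arcmin")
    print(f"1 arcmin reached at {distance_for_acuity_mm(PIXEL_MM) / 1000:.2f} m")
    print(f"about {SCREEN_MM / PIXEL_MM:.0f} pixels across one screen")
```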

VR CAVE – CIREVE

Caen, France

Delivered by Antycip, this 4-sided CAVE inaugurated in 2006 is the largest in France with a projected surface of 118 square meters and a total of 16 million pixels. Equipped with 12 tracking cameras and operating with proprietary management software, CryVR, it allows researchers and users at this university to immerse themselves in virtual reality to visualize their designs, data, and information, as well as train collaborators.

https://steantycip.com/projects/vr-cave-caen/

Tore VR Cave

Lille, France

The University of Lille acquired a CAVE with a unique curved screen in the shape of a half-sphere in 2017. TORE (The Open Reality Experience) has a surface area of 4 by 8 meters, and its shape, unlike the usual cube designs, renders virtual environments with a constant user-to-screen distance. This continuous display is provided by 20 projectors. It is used in a wide range of sectors such as architecture, history, construction, design, automotive, aerospace, and energy.

https://steantycip.com/projects/virtual-reality-tore/

Salles immersives – ENSIIE

Evry, France

The engineering school ENSIIE has acquired 2 CAVEs, one composed of 4 faces supported by a self-supporting structure and the second of 2 faces, which was installed so that students can learn to develop and integrate solutions on this type of platform.

https://indus.scale1portal.com/fr/ensiie-installation-de-deux-salles-immersives/

IRIS – Renault

Guyancourt, France

The Renault Technocentre has several CAVEs in its engineering center, including a five-faced one with a surface area of 3×3 meters and 4K resolution. This CAVE, called IRIS (Immersive Room and Interactive System), has a computing power of 20 teraflops and can accommodate up to 7 people at the same time. These systems are used to project and validate the designs of the brand’s future vehicles.

I-Space- Stellantis

Vélizy, France

The Vélizy center, known as the Automotive Design Network, carries out digital design activities. It houses a three-faced Holo Space and a five-faced CAVE. The CAVE is a three-meter cube and can accommodate car simulators to test 1:1 scale designs of vehicles under development.

Translife – UTC

Compiègne, France

TRANSLIFE is a CAVE belonging to the Heudiasyc laboratory of the University of Technology of Compiègne, with 4 faces that can be reconfigured to 3 open faces. It is based on 4 full HD 3D projectors and TransOne software tools to develop compatible VR applications.

https://www.hds.utc.fr/en/research/plateformes-technologiques/systemes-collaboratifs.html

MOVE – vrbnb

Lille, France

Located in the EuraTechnologies park in Lille, MOVE is a 4-faced CAVE measuring 3.7 meters on each side and 2.3 meters in height. It can also be arranged in an “L” shape or as a giant 11-meter screen.

https://www.vr-bnb.com/fr/listing/513-salle-immersive-cave-move

YViews – Naval Group

Laval, France

Naval Group has acquired several Yview CAVEs, 3-faced immersive rooms produced by TechViz. Each face has a display through a 4K HFR projector, and the CAVE is used to validate designs before production.

https://www.usine-digitale.fr/editorial/chez-dcns-la-realite-virtuelle-s-invite-partout.N321521

Salle immersive – EDF

Saclay, France

Installed by Scale-1 Portal, this self-supporting immersive system has 4 display faces and is used to train EDF employees.

https://indus.scale1portal.com/fr/edf-salle-immersive/

Système immersif portable – Scale-1 Portal

France

Showcased at the Laval Virtual Center in 2015, this portable 2-faced CAVE has a projection surface of up to 3×3 meters. It fits in two suitcases and can be set up in minutes to present 3D environments without technical operators.

https://indus.scale1portal.com/fr/material-immersif/

PRESAGE 2 – CEA-List

Marcoule, France

Completed in 2018, this 5-faced CAVE with a 4K resolution and a total surface area of 19 square meters has haptic feedback arms (Haption’s Scale 1) and 8 motion capture cameras. This allows it to be used for virtual prototyping, workstation diagnostics, preparation and validation of assembly processes, collaboration support, as well as training.

CAVE – Technocampus Smart Factory

Saint-Nazaire, France

This 3-meter by 3-meter CAVE has 5 faces and 10 projectors, powered by 13 computers to provide optimal immersion for users in the industrial sector.

Immersia – Irisa-Inria

Rennes, France

In the “ImmerSTAR” laboratory in partnership with CNRS and M2S lab, there is the Immersia CAVE, a device with 4 rectangular faces measuring 12 by 4 meters and 3 meters high. It has a display frequency of 120 Hz and a tracking system via 12 cameras that allows for precise motion capture across the entire surface.

https://www.irisa.fr/immersia/

CAVE – CERV

Brest, France

This CAVE, operated by the CERV, which brings together laboratories, companies and students in a multidisciplinary context, consists of 4 faces with a surface area of 4 by 3 meters, each driven by one high-resolution projector.

https://cerv.enib.fr/equipements/

CAVE – CRVM

Marseille, France

This CAVE, operated by the CRVM (Centre de Réalité Virtuelle de la Méditerranée), consists of 4 modular screens. The two lateral screens are mobile and can be arranged in a classic cube around the user for complete immersion or unfolded for a 9-meter-long display.

https://irfm.cea.fr/Phocea/Vie_des_labos/Ast/ast.php?t=fait_marquant&id_ast=406

VIKI-CAVE – CEA

Gif-sur-Yvette, France

Located at CEA Saclay Moulon in Gif-sur-Yvette, there is a 5-sided immersive CAVE (3 walls, floor and ceiling, 3.20m x 2m, height: 2.95m). It can be equipped with 2 force-feedback arms (Haption Virtuose 6D with a gripper), all mounted on a motorized SCALE-1 platform.

https://irfm.cea.fr/Phocea/Vie_des_labos/Ast/ast.php?t=fait_marquant&id_ast=406

EVE-Room – LISN

Orsay, France

The CAVE located at LISN (Interdisciplinary Laboratory of Digital Sciences) is a reconfigurable immersive room with four sides, with the ability to accommodate multiple users and capture their movements. The CAVE is 5 meters high and has a 13-square-meter glass floor that is fully rear-projected. It is equipped with a six-degree-of-freedom haptic system that covers the entire workspace (Scale One, Haption), as well as 3D audio features, voice and gesture recognition, and a telepresence system.

https://www.lri.fr/~mbl/CONTINUUM/plateformes.html

Angul-R – Grenoble INP – UGA

Grenoble, France

The Angul-R is part of the Vision-R platform, featuring a 3-faced CAVE that measures 4 meters in height and 3.40 meters in length. Manufactured by Antycip, this system utilizes a cluster of PCs to deliver a pixel size of 1.2 millimeters, creating an immersive virtual reality experience.

https://www.grenoble-inp.fr/en/research/angul-r-a-new-virtual-reality-cave-on-the-vision-r-platform

Germany

CAVE – BMW

Munich, Germany

BMW has a 4-sided CAVE for its design activities. Cubic in shape with a length of 2.50 meters per side, it is operated by a Silicon Graphics Onyx2 computer with 2 IR graphics pipes and 8 CPUs. Interactions are made using two VO devices of the joystick steering wheel type.

https://link.springer.com/chapter/10.1007/978-3-7091-6785-4_21

CAVE – Daimler

Sindelfingen, Germany

Daimler has a 5-sided CAVE in its virtual reality center, made by Barco. It has 4 cameras and 4 infrared sensors to analyze the user’s movements. It is used with a haptic feedback device and finger tracking to interact with the environment.

https://ar-tracking.com/en/solutions/case-studies/automotive-industry/daimler-sindelfingen-germany

Cave – Audi

Germany

Audi has a CAVE used in the stages of automotive design. Interactions are managed by bracelets that capture the muscle movements of the users’ arms.

United Kingdom

VR cave – Huddersfield University

Huddersfield, UK

The University of Huddersfield has a wide range of immersive technologies available through its Phidias Lab. On its premises, there is a 3-sided CAVE with 4K image resolution and the ability to display 3D content. Eye-tracking can be used to improve calibration and image sharpness. It provides students from various academic disciplines the opportunity to develop their design concepts without the need for potentially expensive physical models or printed documents.

https://steantycip.com/projects/vr-cave-for-huddersfield-university/

VR cave – Bath University

Bath, UK

Inaugurated at the University of Bath in 2019, this 3×4 meter installation consists of images projected onto the walls of a room combined with motion tracking glasses to provide a virtual reality experience. The projectors are located outside the room and the optics pass through dedicated spaces in the ceiling. This configuration allows for images to be displayed on “virtual windows” and thus immerse users in a room of a building or a larger environment.

https://steantycip.com/projects/vr-cave-bath-university/

Hiker pedestrian VR cave – Leeds University

Leeds, UK

This 4×9 meter CAVE offers 4K UHD image quality thanks to F90 laser projectors. In addition to screens and projectors, the HIKER laboratory required a VICON tracking camera network with a set of full-body reflective markers, an equipment rack with dedicated PC image generators, and a VIOSO camera-based auto-calibration system. This setup allows participants to walk over long distances and is used to study pedestrian behavior around vehicles.

https://steantycip.com/projects/hiker-pedestrian-cave/

Multiview VR Cave – Oxford Brookes University

Oxford, UK

This CAVE has a resolution of 3840 x 2400 pixels at 120 Hz and allows for two modes: a single-user mode that enables one user to enjoy the full resolution of the system, and a “multiview” mode that allows for the display to be split for two tracked people, enabling them to have a different image corresponding to their position.

https://steantycip.com/projects/uks-first-multiview-vr-cave/

Cave – Manufacturing Technology Centre (MTC)

Coventry, UK

Produced by HoloVis, the virtual immersion room has 4 faces arranged in a square of 4.5 meters in length. The display resolution is 2K and the display frequency is 120 Hz. The CAVE is currently used for prototyping.

United States of America

VR Cave – University of Central Florida

Orlando, USA

This 4-sided CAVE, measuring 3×3 meters, has 2K resolution and a refresh time of about one millisecond to display realistic interactions. This is made possible by the 36 computing units that run the system.

https://www.inparkmagazine.com/a-meeting-of-minds-and-disciplines/

3-D Cave – Ford

Dearborn, USA

Ford has several CAVEs, including the one in Dearborn which is a 4-sided immersive room (3 sides and the ceiling). A seat can be installed to analyze the interior from the driver’s perspective. It is mainly used for automotive design visualization.

https://www.inparkmagazine.com/a-meeting-of-minds-and-disciplines/

Cave2 – University of Illinois

Chicago, USA

This CAVE, which includes high-resolution curved LCD screens in order to eliminate spatial distortions caused by axis problems, allows for circular visualization of environments. Infrared sensor systems are placed on the structure’s ceiling to track users.

https://www.inparkmagazine.com/a-meeting-of-minds-and-disciplines/

StarCave – University of California

San Diego, USA

This CAVE, with 5 faces arranged in a pentagon shape and a floor, has 16 screens (3 in height per side and one on the floor), each equipped with two 2k resolution projectors. The device can be opened for collective use or closed for a fully immersive 360-degree experience. The display is managed via a rack of graphics cards operating through internal CalVR software.

https://chei.ucsd.edu/starcave/

Immersive studies Cave- Villanova University

Radnor Township, USA

This CAVE has 4 faces arranged in a rectangular shape. With a surface area of 5.5 x 3 meters, it can accommodate up to 20 people at the same time. The top screen can be moved up to have 3 faces and a floor or 3 faces and a ceiling. It was designed to work with a robot (Seemore) designed at Villanova which carries a network of spherical immersive video cameras to capture video covering 360 degrees horizontally and 280 degrees vertically. This video can be played back and interactively controlled in the CAVE.

https://www1.villanova.edu/villanova/artsci/ceet/cave.html

Combined Arms Virtual Environment (CAVE) – U.S. Navy

San Diego, USA

This CAVE consists of a partially domed curved screen. It measures 5 meters and offers a 240° field of view on which real-time computer-driven simulation visuals are displayed. It belongs to the US military and is used to train soldiers.

https://mvrsimulation.com/casestudies/jtac-CAVE.html

Cave C6 – Iowa State University

Iowa, USA

This CAVE is a cube with 3-meter sides and has 6 faces. Created in 2000, it has since undergone numerous hardware updates and now has 100 million pixels shared among the faces. It allows for total immersion from all angles once the door is closed and is used in various training projects and domains.

Japan

Immersive VR System – Japan Atomic Energy Agency

Naraha, Japan

Operated at the Naraha Center of the Japan Atomic Energy Agency, this CAVE consists of 4 rectangular faces with a surface area of 3.6 meters by 2.25 meters. The display is driven by five 4K laser projectors, and it has a brightness of 15,000 lumens.

https://www.barco.com/fr/customer-stories/2021/q3/japan-atomic-energy-agency-naraha-center

Synra Dome – Tokyo Science Museum

Tokyo, Japan

The Tokyo Museum has a CAVE projection dome that can display 3D images to viewers. It can adapt to users in real time. The display is managed by 6 projectors and has an HD display.

https://www.barco.com/fr/customer-stories/2018/q2/2018-06-13-science%20museum%20in%20jp

Slovakia

Virtual Cave – Technical University of Kosice

Kosice, Slovakia

This high-resolution CAVE is based on several straight screens arranged in a curved shape with mirrors on the floor and ceiling. Its half-circle shape allows a person to be immersed with a high degree of peripheral vision while remaining compact.

4. Market analysis and obstacles to overcome

The mixed reality market was valued at USD 376.1 million in 2020 and is expected to reach a value of USD 3.915 billion by 2026 with a projected CAGR of 41.8% over this period (Statista).
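A compound annual growth rate (CAGR) links a start value V0 and an end value Vn over n years through Vn = V0 × (1 + CAGR)^n. The short check below applies this standard formula to the figures quoted above, assuming that the 2020 and 2026 values bound a six-year period. Under that assumption, the endpoints imply a rate of roughly 48% per year, while compounding 41.8% for six years from USD 376.1 million gives about USD 3.06 billion; the quoted CAGR may therefore rely on a different base year or period in the source.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: float) -> float:
    """Value reached after compounding `rate` for `years` years."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    START_2020 = 376.1   # USD million (figure quoted above)
    END_2026 = 3915.0    # USD million (USD 3.915 billion)
    YEARS = 2026 - 2020  # assumption: six compounding years
    print(f"implied CAGR: {cagr(START_2020, END_2026, YEARS):.1%}")
    print(f"2026 value at 41.8%/year: USD {project(START_2020, 0.418, YEARS):,.0f} million")
```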

The democratization of VR headsets as we know them today dates back to 2016, when the first consumer version of the Oculus Rift was released. With a 90° field of view and requiring a computer to operate, the headset cost a few hundred euros. Since then, several electronics brands have adopted the technology with the goal of making headsets accessible to as many people as possible at affordable prices.

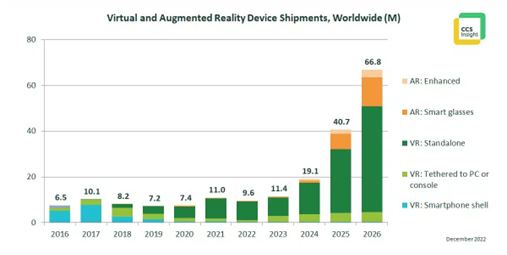

The annual sales of VR devices in 2022 amounted to 1.1 billion dollars.

However, according to CCS Insight, 9.6 million mixed reality devices were shipped that year, a 12% decrease compared to 2021.

Figure 2: Mixed reality device shipments worldwide

This decline can be explained by a sharp drop in sales of the Meta Quest 2, currently the cheapest headset on the market at around 350 euros.

The market is heavily dependent on major manufacturers, their proprietary headsets, and associated content. Currently, the main players are Meta, HTC, Microsoft, Sony, and Google.

Sony released its new headset, the PlayStation VR2, this year for 550 euros, which should broaden the use of virtual reality in video games to some extent.

Apple is widely regarded as a major driving force in the industry, and its long-awaited Apple Vision Pro, with augmented reality capabilities, has generated considerable anticipation over the past few years. With a detachable battery lasting about 2 hours and integration with other Apple devices, it is designed to ease collaboration and cross-platform use. The arrival of new competitors such as Apple could stimulate growth in both head-mounted display (HMD) sales and the availability of applications.

Applications for these devices are built with real-time 3D engines, Unity and Unreal Engine being the most popular and widely used. Microsoft and Apple have chosen Unity as a development toolkit for their platforms, enabling developers to build experiences from the ground up. Additional plug-ins and standards, such as OpenXR or the Oculus integration, provide integration and configuration of various immersive devices and their peripherals, including joysticks, triggers, vibration motors, screens, and more. As these software tools mature and artificial intelligence tools come into play, developing new virtual reality experiences is expected to become quicker and cheaper.

The VR gaming market is expected to reach USD 92 billion by 2027, with an annual growth rate of 30.2% (Statista). However, VR remains predominantly used in Western countries: 19% of Americans used it last year, a share that is much lower in other regions of the world. It is predicted that 23 million jobs will use VR or AR during this decade. The technology could soon inject USD 1.83 billion into the global economy (CCS Insight).

Although VR adoption is expected to grow in the near future, the technology still struggles to find a place in mainstream use because of several obstacles.

There are two main barriers to the widespread acceptance of these technologies by the general public:

- Hardware limitations

- Cybersickness

4.1. Hardware limitations

Some VR headsets are bulky, heavy, or poorly adjusted, which can make them uncomfortable, especially during prolonged use. Furthermore, they are complex devices that require an adaptation period.

Also, a non-standalone VR headset often requires a powerful computer or a compatible game console. These additional hardware requirements, such as high-end graphics cards, can be a barrier for those who do not already own compatible equipment.

Users expect ever better performance: higher resolution, a wider field of view, longer battery life, lower weight for comfortable use, and a price accessible to everyone. Meeting these expectations is one of the greatest challenges for this technology, although ongoing advances are expected to address it progressively in the coming years.

Today, manufacturers must balance visual quality, battery life, responsiveness, price and headset ergonomics, all of which are crucial for a comfortable and enjoyable VR experience. VR headset displays use either OLED (Organic Light-Emitting Diode) or LCD (Liquid Crystal Display) technology, each with its own trade-offs. OLED screens offer vivid colors, high contrast and fast response times, which make for immersive visuals, but they can add weight to the headset. LCD screens, although less vibrant than OLED, are lighter and more energy-efficient, making them a suitable option for extended VR sessions.

Within a decade, connected glasses could well be worn by everyone, in both professional and personal life, with mixed reality used across business processes and everyday activities. Once the technology is widely accepted, its potential added value is considerable.

4.2. Cybersickness

Users of VR technology can be prone to cybersickness, which is a major reason why people do not buy VR devices. Simulator sickness (or cybersickness) is defined as the occurrence of symptoms similar to those of motion sickness, such as dizziness, headaches, nausea and vertigo.

The most scientifically supported theory explains this phenomenon as a sensory conflict: cybersickness originates in a mismatch between the signals that different senses provide about the body's motion (Reason, 1978). In virtual reality, this conflict mainly takes the form of a perceptual gap between the inner ear and the visual system.

It is worth noting that simulator sickness can vary from person to person, and some individuals may be more susceptible than others. Factors such as the duration of the virtual experience, the intensity of virtual movements, and individual sensitivity can also influence the likelihood of simulator sickness.

In this section, we focus on the solutions and studies that address the technological aspects of this phenomenon and on how to reduce it.

4.2.1. Driving simulators

According to the book “Getting Rid of Cybersickness”, automotive simulation and virtual reality are very similar in terms of their technologies (Kemeny et al., 2020).

Automotive simulators are used in various sectors, including design validation and testing of autonomous driving technologies. Until about twenty years ago, most studies on cybersickness were conducted on automotive simulators. Therefore, we will first examine research from this field.

The article “(Im)possibilities of studying car sickness in a driving simulator” highlights the differences between motion sickness and simulator sickness, and explains the latter through visual effects. The perception of motion is strongly influenced by vision, but in the case of simulator sickness, this visual perception does not always coincide with physical movement (Bos et al., 2021).

Furthermore, the geometric transformation of a 3D environment into 2D images (even stereoscopic ones) changes the perception of space. Because the images are displayed at a fixed focal distance, even through Fresnel lenses, the brain cannot perceive depth as it does naturally. The main differences identified are therefore that the vision provided by digital display systems does not correspond to reality, and that the motion predicted from vision does not correspond to what the inner ear senses.
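The fixed focal distance described above is usually called the vergence-accommodation conflict. The eyes converge on a virtual object at its rendered distance, but they must keep focusing at the distance set by the headset optics. The sketch below quantifies the mismatch in diopters (1/m). The 63 mm interpupillary distance and the 1.5 m focal distance are assumptions chosen for illustration, and actual headsets differ.

```python
import math

IPD_M = 0.063           # assumed interpupillary distance (63 mm)
FOCAL_DISTANCE_M = 1.5  # assumed fixed focal distance of the headset optics


def vergence_angle_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between the two lines of sight when fixating a point straight ahead."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def accommodation_mismatch_diopters(virtual_distance_m: float,
                                    focal_distance_m: float = FOCAL_DISTANCE_M) -> float:
    """Gap between where the eyes converge and where they must focus, in diopters (1/m)."""
    return abs(1 / virtual_distance_m - 1 / focal_distance_m)


for d in (0.3, 0.5, 1.5, 10.0):
    print(f"object at {d:>4} m: vergence {vergence_angle_deg(d):5.2f} deg, "
          f"focus fixed at {FOCAL_DISTANCE_M} m, mismatch {accommodation_mismatch_diopters(d):.2f} D")
```

The conflict vanishes only for objects rendered at the focal distance and grows quickly for nearby objects.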

Driving simulators can induce both motion sickness and cybersickness because of the disparities between the simulation and reality (Baumann et al., 2021). For example, accelerations are often simulated by rotating the simulator about its three rotation axes, which can cause simulator sickness and has been shown to significantly increase scores on the SSQ (Simulator Sickness Questionnaire, a questionnaire based on cybersickness symptoms).
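The rotation-based approach mentioned here is commonly known as tilt coordination. The cabin is tilted so that part of gravity is felt as a sustained longitudinal or lateral acceleration. The sketch below computes the required tilt and how long it takes to reach it if the rotation is kept slow enough not to be perceived. The 3 deg/s rate limit is an assumed, illustrative threshold, not a value from the cited study. The point is that a strong, sudden acceleration cannot be reproduced quickly without the rotation itself becoming perceptible, which is one source of the conflict described above.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def tilt_angle_deg(accel_ms2: float) -> float:
    """Pitch angle at which the component of gravity along the driver's longitudinal axis
    equals the acceleration to be simulated (a = g * sin(theta))."""
    if abs(accel_ms2) > G:
        raise ValueError("a sustained acceleration above 1 g cannot be rendered by tilt alone")
    return math.degrees(math.asin(accel_ms2 / G))


def time_to_tilt_s(angle_deg: float, max_rate_deg_s: float = 3.0) -> float:
    """Time needed to reach the tilt when the rotation rate stays below an assumed
    perception threshold, so that the rotation itself is not noticed."""
    return abs(angle_deg) / max_rate_deg_s


for braking in (1.0, 3.0, 6.0):  # m/s^2, hypothetical braking levels
    angle = tilt_angle_deg(braking)
    print(f"{braking} m/s^2 -> tilt {angle:.1f} deg, reached after {time_to_tilt_s(angle):.1f} s")
```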

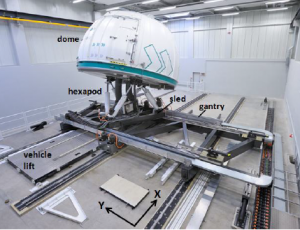

The most common solution is to allow real translations by mounting the simulator on a mobile base running on rails, as in the Stuttgart simulator. This simulator can move 7 to 10 meters along two axes, replacing simulated accelerations with real ones.

Figure 3 : FKFS Stuttgart University Driving Simulator
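Even a long rail only provides a few seconds of real acceleration. The sketch below assumes that the platform starts at rest at one end of its travel and must brake at the same rate to stop at the other end. The 7 and 10 m lengths are the figures quoted for the Stuttgart simulator, and the accelerations are hypothetical. This helps explain the limits of real translation for long, sustained accelerations.

```python
import math


def max_acceleration_time_s(travel_m: float, accel_ms2: float) -> float:
    """Longest time a constant acceleration can be held on a rail of length travel_m,
    starting at rest at one end and braking at the same rate to stop at the other end.
    Accelerating over half the travel: travel_m / 2 = accel * t**2 / 2."""
    return math.sqrt(travel_m / accel_ms2)


for travel in (7.0, 10.0):    # rail lengths quoted for the Stuttgart simulator
    for accel in (1.0, 3.0):  # hypothetical accelerations, m/s^2
        t = max_acceleration_time_s(travel, accel)
        print(f"{travel:>4} m rail, {accel} m/s^2 -> at most {t:.2f} s of real acceleration")
```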

Other causes of simulator sickness mentioned in the article “Driving simulator studies for kinetosis-reducing control of active chassis systems in autonomous vehicles” (Baumann et al., 2021) are image quality, the distance between the user and the screen, and the latency between the driver's actions and the system's responses, which is an important factor in the subject's experience. These parameters cannot be changed within a proprietary headset or CAVE without an external device. We will therefore look at software methods to limit this phenomenon.
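One way to see why latency matters is to compute how far the displayed view lags behind the true orientation while the viewpoint is rotating. To first order, the error is the angular velocity multiplied by the end-to-end latency. The 100 deg/s rate and the latency values below are illustrative assumptions, not measurements from the cited article.

```python
def lag_error_deg(angular_velocity_deg_s: float, latency_ms: float) -> float:
    """Angle by which the displayed image lags the true orientation: error = omega * latency."""
    return angular_velocity_deg_s * latency_ms / 1000.0


# Hypothetical yaw rate of 100 deg/s (a brisk head turn or steering manoeuvre).
for latency in (20, 50, 100):
    print(f"{latency:>3} ms latency -> image lags by {lag_error_deg(100, latency):.1f} deg")
```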

4.2.2. CAVEs

In a CAVE, the user is not as completely immersed in VR as in an HMD (Kemeny et al., 2020), and this type of system is known to induce much less simulator sickness. Besides the image resolution of the projectors, which is often higher than that of headsets, one of the main reasons is thought to be proprioception: the user always sees their own body when immersed in a CAVE, which is known to play a crucial role in maintaining stability and compensating for visuo-vestibular conflict.

The study “The application of virtual reality technology to testing resistance to motion sickness” measured oculomotor characteristics, such as the number of eye blinks, fixations and saccadic eye movements, during interaction with a virtual environment, and compared them with resistance to cybersickness symptoms (Menshikova et al., 2017). The SSQ results were found to coincide with pronounced oculomotor characteristics.
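Since the SSQ is the common yardstick in these studies, the sketch below shows how its scores are usually computed. Sixteen symptoms are rated from 0 (none) to 3 (severe). The ratings are summed into three overlapping subscales (Nausea, Oculomotor, Disorientation) and multiplied by fixed weights. The item-to-subscale mapping and the weights follow the scoring key commonly reproduced from Kennedy and colleagues (1993). Check them against the original publication before using them in a study.

```python
# Subscales each symptom contributes to: N = Nausea, O = Oculomotor, D = Disorientation.
SSQ_ITEMS = {
    "general discomfort": "NO",
    "fatigue": "O",
    "headache": "O",
    "eyestrain": "O",
    "difficulty focusing": "OD",
    "increased salivation": "N",
    "sweating": "N",
    "nausea": "ND",
    "difficulty concentrating": "NO",
    "fullness of head": "D",
    "blurred vision": "OD",
    "dizziness (eyes open)": "D",
    "dizziness (eyes closed)": "D",
    "vertigo": "D",
    "stomach awareness": "N",
    "burping": "N",
}
SUBSCALE_WEIGHTS = {"N": 9.54, "O": 7.58, "D": 13.92}
TOTAL_WEIGHT = 3.74


def score_ssq(ratings: dict[str, int]) -> dict[str, float]:
    """Return weighted N, O, D and Total scores from 0-3 ratings (missing items count as 0)."""
    unknown = set(ratings) - set(SSQ_ITEMS)
    if unknown:
        raise ValueError(f"unknown SSQ items: {sorted(unknown)}")
    raw = {"N": 0, "O": 0, "D": 0}
    for item, subscales in SSQ_ITEMS.items():
        rating = ratings.get(item, 0)
        if rating not in (0, 1, 2, 3):
            raise ValueError(f"{item!r} must be rated 0-3, got {rating}")
        for subscale in subscales:
            raw[subscale] += rating
    scores = {name: raw[name] * weight for name, weight in SUBSCALE_WEIGHTS.items()}
    scores["Total"] = (raw["N"] + raw["O"] + raw["D"]) * TOTAL_WEIGHT
    return scores


print(score_ssq({"eyestrain": 2, "difficulty focusing": 1, "headache": 1}))
```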

The paper suggests that the vestibular system can be “trained” and that some athletes, accustomed to using it, are less sensitive to simulator sickness than novices on average.

On this basis, as VR devices become more widespread, people may become less susceptible to these symptoms over time.

4.2.3. HMD

Simulator sickness is a widely researched field but, as mentioned before, its findings are still little applied to VR. It is estimated that between 65 and 90% of VR users may have experienced symptoms.

Figure 4 : Symptoms experienced in virtual reality according to Vishwakarma University

In an HMD, the movements of the user's real body are not directly reflected in the virtual scene, which can lead to sensory mismatch. This mismatch can cause simulator sickness in certain individuals, especially when the virtual movements do not correspond to the real ones. Furthermore, the display is only a few centimeters from the user's eyes, which can cause additional discomfort.

Visualization Parameters

The most effective way to reduce cybersickness is to make vestibular and visual stimuli consistent with each other. This is not always possible because of hardware and/or physical constraints, but the impact of the conflict can be reduced through various strategies, such as adjusting certain display parameters. One common method in a virtual reality headset is to reduce ocular stimulation, for example by narrowing the field of view or lowering the brightness. The following papers focus on reducing visual stimuli by applying effects to the virtual environment to compensate for the lack of vestibular stimuli.

Vection, the illusory feeling of self-motion, is induced by vision (Bardy et al., 1999). The problem is that in VR, it mostly occurs without any actual movement, for example when the user moves through a virtual environment while physically staying still.

Research has shown that reducing the field of vision during movements, specifically the peripheral field, attenuates the feeling of motion and therefore reduces cybersickness.
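A minimal sketch of such a dynamic field-of-view restrictor follows. The rendered field of view (typically implemented as a vignette) narrows as the virtual speed or turning rate increases and widens again when the user stops. The linear mapping, the 110 deg / 70 deg limits, the speed and yaw-rate thresholds, and the smoothing factor are all hypothetical tuning values, not parameters from a specific study.

```python
from dataclasses import dataclass, field


@dataclass
class FovRestrictor:
    full_fov_deg: float = 110.0  # hypothetical unrestricted field of view
    min_fov_deg: float = 70.0    # hypothetical narrowest field of view during fast motion
    max_speed_ms: float = 3.0    # virtual speed at which the restriction is strongest
    max_yaw_deg_s: float = 90.0  # virtual turn rate at which the restriction is strongest
    smoothing: float = 0.1       # fraction of the remaining gap closed each frame
    current_fov_deg: float = field(init=False)

    def __post_init__(self) -> None:
        self.current_fov_deg = self.full_fov_deg

    def update(self, speed_ms: float, yaw_rate_deg_s: float) -> float:
        """Return the field of view to render this frame."""
        # Motion intensity in [0, 1]; whichever cue (translation or rotation) is stronger wins.
        intensity = max(min(abs(speed_ms) / self.max_speed_ms, 1.0),
                        min(abs(yaw_rate_deg_s) / self.max_yaw_deg_s, 1.0))
        target = self.full_fov_deg - intensity * (self.full_fov_deg - self.min_fov_deg)
        # Ease towards the target so the vignette does not visibly pop in and out.
        self.current_fov_deg += self.smoothing * (target - self.current_fov_deg)
        return self.current_fov_deg


restrictor = FovRestrictor()
for _ in range(30):  # 30 frames of full-speed virtual motion
    fov = restrictor.update(speed_ms=3.0, yaw_rate_deg_s=0.0)
print(f"FOV while moving: {fov:.1f} deg")
```

Restricting the view only while the user moves is one way to limit the loss of immersion and information that a permanent restriction would cause.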

It has been shown that peripheral vision has a much greater impact than central vision on the perception of motion, which is explained both by the physiology of the eye and by the density of the optical flow in the periphery.
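One simple geometric argument for the role of the periphery is as follows. When an observer translates through a static scene, a point at distance d seen at an angle θ from the direction of travel moves across the visual field at an angular speed of v·sin(θ)/d. Points near the direction of travel barely move, while points towards the periphery sweep past quickly. The walking speed and the uniform 5 m distance below are simplifying assumptions. The sketch covers only this geometric part of the argument, not the physiology of the eye.

```python
import math


def flow_speed_deg_s(speed_ms: float, distance_m: float, eccentricity_deg: float) -> float:
    """Angular speed of a static point at distance_m, seen eccentricity_deg away from the
    direction of travel, for an observer translating at speed_ms (no head rotation)."""
    return math.degrees(speed_ms * math.sin(math.radians(eccentricity_deg)) / distance_m)


for ecc in (5, 20, 45, 90):
    print(f"{ecc:>2} deg from heading -> {flow_speed_deg_s(1.4, 5.0, ecc):5.1f} deg/s")
```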

However, field-of-view restrictions come at a high cost in terms of immersion and of the information available to the user.

Another paper (Lin et al., 2020) relies on the same principle but uses a different method of reducing optical flow: blurring peripheral vision to reduce simulator sickness. This approach retains some of the peripheral information and is therefore preferable from the user's standpoint, but such filters are costly in hardware resources, which can be a drawback.
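A rough sketch of static peripheral blurring on a small grayscale image follows. A blurred copy is blended with the sharp image using a radial mask that is 0 in the centre and 1 at the edges. This is a generic technique, not Lin et al.'s implementation, and the blur radius and inner/outer radii are hypothetical. The nested loops also make the hardware cost mentioned above visible. A naive box blur reads (2r+1)² pixels for every output pixel, which is why real-time implementations tend to rely on cheaper approximations such as separable filters.

```python
import math


def box_blur(img: list[list[float]], radius: int) -> list[list[float]]:
    """Naive box blur: each output pixel averages a (2*radius+1)^2 neighbourhood."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def radial_mask(w: int, h: int, inner: float, outer: float) -> list[list[float]]:
    """0 inside the normalised radius `inner`, 1 beyond `outer`, smooth ramp in between."""
    cx, cy = (w - 1) / 2, (h - 1) / 2
    max_r = math.hypot(cx, cy)
    mask = []
    for y in range(h):
        row = []
        for x in range(w):
            r = math.hypot(x - cx, y - cy) / max_r
            t = min(max((r - inner) / (outer - inner), 0.0), 1.0)
            row.append(t * t * (3 - 2 * t))  # smoothstep
        mask.append(row)
    return mask


def peripheral_blur(img, radius=2, inner=0.4, outer=0.8):
    """Keep the centre sharp and blend progressively towards the blurred image at the edges."""
    blurred = box_blur(img, radius)
    mask = radial_mask(len(img[0]), len(img), inner, outer)
    return [[(1 - m) * s + m * b for s, b, m in zip(s_row, b_row, m_row)]
            for s_row, b_row, m_row in zip(img, blurred, mask)]


# Hypothetical 9 x 9 checkerboard standing in for a rendered frame.
img = [[float((x + y) % 2) for x in range(9)] for y in range(9)]
out = peripheral_blur(img)
print(f"centre {out[4][4]:.2f} (sharp), corner {out[0][0]:.2f} (blurred)")
```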

Furthermore, a higher image refresh rate, although it does not address the visuo-vestibular conflict, reduces the onset of symptoms.

Geometry simplification filters, applied mainly to vertical lines, can also be used to reduce the self-motion perceived by the user (Lou & Chardonnet, 2019).

Navigation controls

Navigation is the main source of vection in a virtual environment: movement in VR is often simulated through locomotion metaphors, which can induce these symptoms.

The most commonly used navigation metaphor is currently constant-speed movement with little or no noticeable acceleration, borrowed from the locomotion methods of video games on other platforms (Porcino et al., 2017).

The study “Minimizing cyber sickness in head mounted display systems: design guidelines and applications” (Porcino et al., 2017) suggests that adaptive navigation, controlled through acceleration and deceleration, can reduce cybersickness: the speed increases until it reaches a maximum and then decreases until a complete stop. The aim is for the vection to be as close as possible to natural human movement.
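A minimal sketch of this kind of acceleration-based control follows. While the locomotion input is held, the virtual speed ramps up to a maximum. After release, it ramps back down to a stop instead of jumping to zero. The maximum speed, acceleration, deceleration and 90 Hz frame rate are hypothetical values and are not taken from the cited study.

```python
def next_speed(speed: float, moving: bool, dt: float,
               v_max: float = 1.5, accel: float = 1.0, decel: float = 1.5) -> float:
    """Ramp the virtual speed up while the input is held and down after release."""
    if moving:
        return min(speed + accel * dt, v_max)
    return max(speed - decel * dt, 0.0)


dt = 1 / 90  # one frame at an assumed 90 Hz refresh rate
speed, trace = 0.0, []
for frame in range(360):  # 4 s in total
    held = frame < 180    # input held for the first 2 s, then released
    speed = next_speed(speed, held, dt)
    trace.append(speed)
print(f"peak {max(trace):.2f} m/s, final {trace[-1]:.2f} m/s")
```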

Another paper, “Development of a Speed Protector to Optimize User Experience in 3D Virtual Environments” (Wang et al., 2020), proposes thresholds that constrain speed, acceleration and jerk, thereby limiting the occurrence of cybersickness symptoms. These vection protectors are also meant to prevent users from involuntarily generating false inputs in the locomotion system.
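A minimal sketch of a limiter in this spirit follows. The requested virtual speed passes through thresholds on speed, acceleration and jerk before it is applied to the camera. The threshold values are hypothetical. The rate-limiting scheme is our own illustration, not the algorithm published by Wang et al. It is a standard jerk-limited approach that caps acceleration so it can be ramped back to zero before the target speed is reached.

```python
import math
from dataclasses import dataclass


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(value, high))


@dataclass
class SpeedLimiter:
    max_speed: float = 2.0  # m/s, hypothetical threshold
    max_accel: float = 1.0  # m/s^2, hypothetical threshold
    max_jerk: float = 2.0   # m/s^3, hypothetical threshold
    speed: float = 0.0
    accel: float = 0.0

    def update(self, requested_speed: float, dt: float) -> float:
        """Move the virtual speed towards the request while respecting the thresholds."""
        target = clamp(requested_speed, -self.max_speed, self.max_speed)
        error = target - self.speed
        # Largest acceleration that can still be brought back to zero at the jerk limit
        # before the target is reached (a**2 / (2 * jerk) <= |error|), capped by max_accel.
        cap = min(self.max_accel, math.sqrt(2 * self.max_jerk * abs(error)))
        desired_accel = math.copysign(cap, error)
        # Jerk limit: the acceleration may change by at most max_jerk * dt per step.
        step = self.max_jerk * dt
        self.accel += clamp(desired_accel - self.accel, -step, step)
        self.speed += self.accel * dt
        return self.speed


limiter = SpeedLimiter()
dt = 0.01
speeds = [limiter.update(5.0, dt) for _ in range(600)]  # sudden full-stick request, 6 s
print(f"speed after 6 s: {speeds[-1]:.2f} m/s (capped at {limiter.max_speed} m/s)")
```

Because the update runs in discrete time, the speed can settle with a very small residual oscillation around the target. An exact S-curve planner would avoid this.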

The paper “A discussion of cybersickness in virtual environments” presents a second locomotion technique often used in VR applications: teleportation (LaViola et al., 2000).

Teleportation avoids vection by moving the user instantly from one position to another, and it has been shown to be effective in reducing cybersickness. However, it can cause a loss of orientation and reduces immersion, since it bears no resemblance to natural movement. The paper also discusses other factors of cybersickness in addition to those mentioned earlier, including the observer's eye height (which directly influences vection), visual integration and low resolution.
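The influence of eye height can be illustrated with the global optical flow rate, a quantity often used in the self-motion literature. It is the observer's speed divided by their eye height, that is, how many eye heights of ground texture stream past per second. At the same virtual speed, a lower viewpoint produces faster ground flow and therefore, typically, stronger vection. The speeds and eye heights below are hypothetical.

```python
def global_optical_flow_rate(speed_ms: float, eye_height_m: float) -> float:
    """Ground-texture flow in eye heights per second: speed divided by eye height."""
    if eye_height_m <= 0:
        raise ValueError("eye height must be positive")
    return speed_ms / eye_height_m


# Same virtual speed, different (hypothetical) viewpoint heights.
for label, height in (("standing", 1.7), ("seated", 1.2), ("crouching", 0.6)):
    rate = global_optical_flow_rate(3.0, height)
    print(f"{label:<9} eye height {height} m -> {rate:.2f} eye heights/s")
```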

The study “New VR Navigation Techniques to reduce cybersickness” examines navigation techniques in VR applications and provides an interesting analysis of rotation as a key factor in cybersickness (Kemeny et al., 2017). Continuous rotation, which smoothly rotates the user's viewpoint around an axis (usually the vertical axis), can significantly harm the user's well-being in virtual reality.

One solution presented in this study is discrete angular rotation, which is used in some VR experiences to reduce simulator sickness. With this technique, the viewpoint rotates in abrupt steps of a fixed number of degrees, relying on the user to turn their head for precise adjustments.
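A minimal sketch of this discrete rotation, often called snap turning, follows. Each flick of the thumbstick rotates the viewpoint by a fixed angle with no intermediate frames, so no rotational optical flow is shown. Fine adjustments are left to real head movements. The 30 deg step, the 0.7 stick threshold and the thumbstick control scheme itself are hypothetical choices.

```python
import math


def snap_turn(yaw_deg: float, stick_x: float, was_pressed: bool,
              step_deg: float = 30.0, threshold: float = 0.7) -> tuple[float, bool]:
    """Rotate the viewpoint by a fixed step once per stick flick.

    Returns the new yaw (0-360 deg) and whether the stick is currently past the threshold,
    which must be fed back in on the next frame so that holding the stick turns only once.
    """
    pressed = abs(stick_x) >= threshold
    if pressed and not was_pressed:  # rising edge: the stick has just been pushed
        yaw_deg = (yaw_deg + math.copysign(step_deg, stick_x)) % 360.0
    return yaw_deg, pressed


yaw, pressed = 0.0, False
for stick in (1.0, 1.0, 0.0, -1.0, 0.0, -1.0):  # hypothetical per-frame stick readings
    yaw, pressed = snap_turn(yaw, stick, pressed)
    print(f"stick {stick:+.1f} -> yaw {yaw:.0f} deg")
```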

References

Le traité de la réalité virtuelle volume 1 – l’Homme et l’environnement virtuel, (2009). A. Berthoz, J-L Vercher, P. Fuchs & G. Moreau, Mines Paris

Virtual Reality : Introduction. A. Ismail & J.S. Pillai, IDC, IIT Bombay

Former avec la réalité virtuelle, (2019). E.G. Mignot, B. Wolff, N. Kempf, M. Barabel & O. Meier, Dunod, LeLabRH

La réalité virtuelle : un média pour apprendre, (2001). D. M. d’Huart, AFPA-DEAT

Sculpting Cars in virtual Reality, (2015). M. Traverso, CarBodyDesign

Après Audi, la start-up Holoride et son expérience VR séduisent Ford, (2019). A. Vitard, L’USINE DIGITALE

Visualisation de données en AR et VR : interagir et interpréter les données autrement, (2020). R. Serge, realite-virtuelle.com

Réalité virtuelle pour visualiser un projet. Digital Construction, Buildwise

XR collaboration beyond virtual reality: work in the real world, (2021). Lee Y. & Yoo B., Journal of Computational Design and Engineering, Volume 8

Immersive Rooms and VR Cave: Unlock the Potential of VR for business, (2023). S. Lasserre, TechViz

Le marché des casques de réalité virtuelle dans une mauvaise passe, (2022). B. Terrasson, SiecleDigital.

Les mondes virtuels restent peu visités jusqu’à présent, (2022). T. Gaudiaut, Statista.

Chiffres VR : les statistiques-clés du secteur à connaître pour bien préparer 2021, (2021). R. Serge, realite-virtuelle.com

Investigation on Motion Sickness in Virtual Reality Environment from the Perspective of User Experience, (2020). C. Zhang, IEEE 3rd International Conference on Information Systems and Computer Aided Education (ICISCAE)

A systematic review of Cybersickness, (2014). Davis S., Nesbitt K. & Nalivaiko E., IE2014 : Proceedings of the 2014 Conference on Interactive Entertainment

Motion sickness adaptation: a neural mismatch model, (1978). Reason J.T., Journal of the Royal Society of Medicine, 71, 819–829.

Getting Rid of Cybersickness in Virtual Reality, Augmented Reality, and Simulators, (2020). Kemeny A., Chardonnet J.R. & Colombet F., Springer

(Im)possibilities of studying car sickness in a driving simulator, (2021). Bos J. E., Nooij S.A.E. & Souman J.L., DSC Proceedings 2021

Driving simulator studies for kinetosis-reducing control of active chassis systems in autonomous vehicles, (2021). Baumann G, Jurisch M., Holzapfel C., Buck C. & Reuss H.C., DSC Proceedings 2021

The application of virtual reality technology to testing resistance to motion sickness, (2017). Menshikova G.Y., Kovalev A.I., Klimova O.A. & Barabanschikova V.V., Faculty of Psychology, Lomonosov Moscow State University

The role of central and peripheral vision in postural control during walking, (1999). Bardy B.G., Warren W.H. & Kay B.A., Perception & Psychophysics

How the presence and Size of Static Peripheral Blur Affects Cybersickness in Virtual Reality, (2020). Lin Y.X., Venkatakrishnan R., Ebrahimi E., Lin W.C. & Babu S.V., ACM Transactions on Applied Perception, Volume 17

Reducing Cybersickness by Geometry Deformation, (2019). Lou R. & Chardonnet J.R., IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Japan

Minimizing cyber sickness in head mounted display systems: design guidelines and applications, (2017). Porcino T.M., Clua E., Trevisan D., Vasconcelos C.N. & Valente L., Fluminense Federal University, Institute of Computing Brazil

Cybersickness in the presence of scene rotational movements along different axes, (2001). So R. & Lo W., Hong Kong University of Science and Technology

Development of a speed protector to optimize user experience in 3D virtual environments, (2020). Wang Y., Chardonnet J.R. & Merienne F., Arts et Métiers Institute of Technology, LISPEN, HESAM Université

A discussion of cybersickness in virtual environments, (2000). LaViola J.J., ACM SIGCHI Bulletin, Volume 32

New VR Navigation Techniques to reduce cybersickness, (2017). Kemeny A., George P., Merienne F. & Colombet F., Laboratoire d’Ingénierie des Systèmes Physiques Et Numériques (LISPEN)